Why do you need it

In large commercial projects, developers, testers, and operations team members need to set up end-to-end application stacks to carry out their tasks. These stacks usually combine several technologies, such as web servers, databases, and messaging systems. In practice, developing, testing, and deploying a stack with this many components raises a lot of issues. First, compatibility with the underlying OS can be a real problem when certain versions of services, libraries, and dependencies do not work with it. To get the stack running smoothly, you have to find an OS that is compatible with all of the different services.

Similarly, one service may require a particular version of a dependent library while another service requires a different version of the same library. Components also need to be upgraded as the architecture of an application changes over time, which means repeating the same compatibility checks between the components and the underlying infrastructure.

Furthermore, every new developer who comes on board has to follow a long set of instructions and run hundreds of commands to set up their environment, and can still end up with a mess. It gets even more complex when there are separate development, test, and production environments to manage. It’s hard to guarantee that the application will run the same way in every environment, since developers are comfortable with different OSs.

All of this causes severe delays at various stages of the development cycle and makes it hard to ship products on time.

What can it do

Platforms that provide many kinds of services have long suffered from having no way around these compatibility issues. Changing a component without affecting the others, and without modifying the underlying OS, is a challenge every platform faces as it grows. This is where Docker comes in. Docker can run each component in a separate container with its own dependencies and libraries. What is special is that all of this happens on the same VM and the same OS, but within separate environments, or containers. Once a Docker configuration has been written for a container, every developer can get started with a simple docker run command.

What are Containers

Containers are isolated environments: they can have their own processes or services, their own network interfaces, and their own mounts, just like VMs, except that they all share the same OS kernel. Containers were not invented by Docker. The idea goes back to 1979, when chroot appeared during the development of Unix V7. Later, FreeBSD Jails, Linux VServer, Solaris Containers, OpenVZ, LXC, and others built on the concept. Docker itself used LXC in its early days and later replaced it with its own library, libcontainer. Docker set itself apart by offering an entire ecosystem for container management and making containers much easier for end users.

OS

An operating system usually consists of two things: an OS kernel and a set of software. The kernel is responsible for interacting with the underlying hardware. For operating systems like Ubuntu, Fedora, and CentOS, the kernel is Linux. What sets them apart is the software on top: different user interfaces, compilers, file managers, and so on.

Sharing Kernel

Docker containers share the underlying kernel. As an example, say Docker is installed on a CentOS host. Docker can then run containers based on any flavor of OS, such as Fedora, Ubuntu, or Debian, as long as they are all based on the same kernel, in this case Linux. Each container carries only the additional software that makes its OS different, and Docker uses the kernel of the Docker host to run all of them.
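One way to see the shared kernel for yourself is to ask containers built from different distributions which kernel they are running on. The helper below is only a sketch: the function name kernel_in_container is made up for this example, and it assumes Docker is installed on a Linux host that can pull the public ubuntu, fedora, and debian images.

```shell
# Hypothetical helper (the name is ours, not part of Docker):
# print the kernel release as seen from inside a throwaway container.
kernel_in_container() {
  # --rm removes the container as soon as the command exits.
  docker run --rm "$1" uname -r
}

# Usage on a Linux Docker host:
#   uname -r                      # kernel of the host itself
#   kernel_in_container ubuntu
#   kernel_in_container fedora
#   kernel_in_container debian
```

On a native Linux host, all four commands should report the same kernel release, because each container brings its own userland but not its own kernel.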

What about an OS that does not share that kernel, such as Windows? You cannot run a Windows-based container on a Docker host with a Linux kernel; for that, you need Docker on a Windows server. The reverse does work, though: Docker on Windows can run Linux containers, which it does by running them inside a lightweight Linux VM.

Some may see this as a disadvantage. But unlike hypervisors, containers were never meant to run operating systems with different kernels. Docker is not meant to virtualize and run different operating systems and kernels on the same hardware. Its main purpose is to package and containerize applications so you can ship them and run them anywhere, anytime, as many times as you want. The confusion clears up once you understand the difference between VMs and containers.

Containers vs VMs

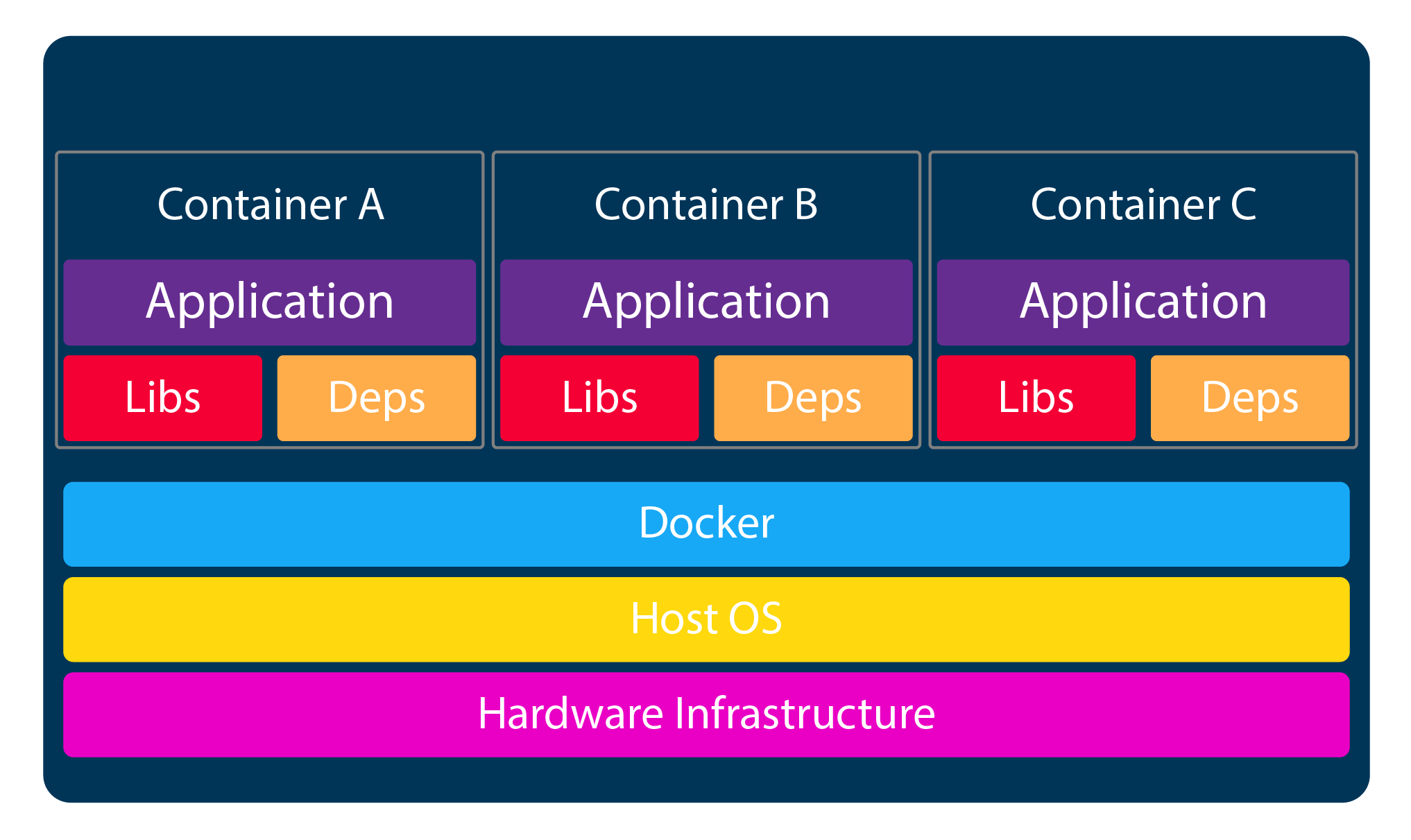

In the case of Docker, we have the underlying hardware infrastructure, then the OS, and then Docker installed on the OS. Docker manages the containers, each of which carries only its application, libraries, and dependencies.

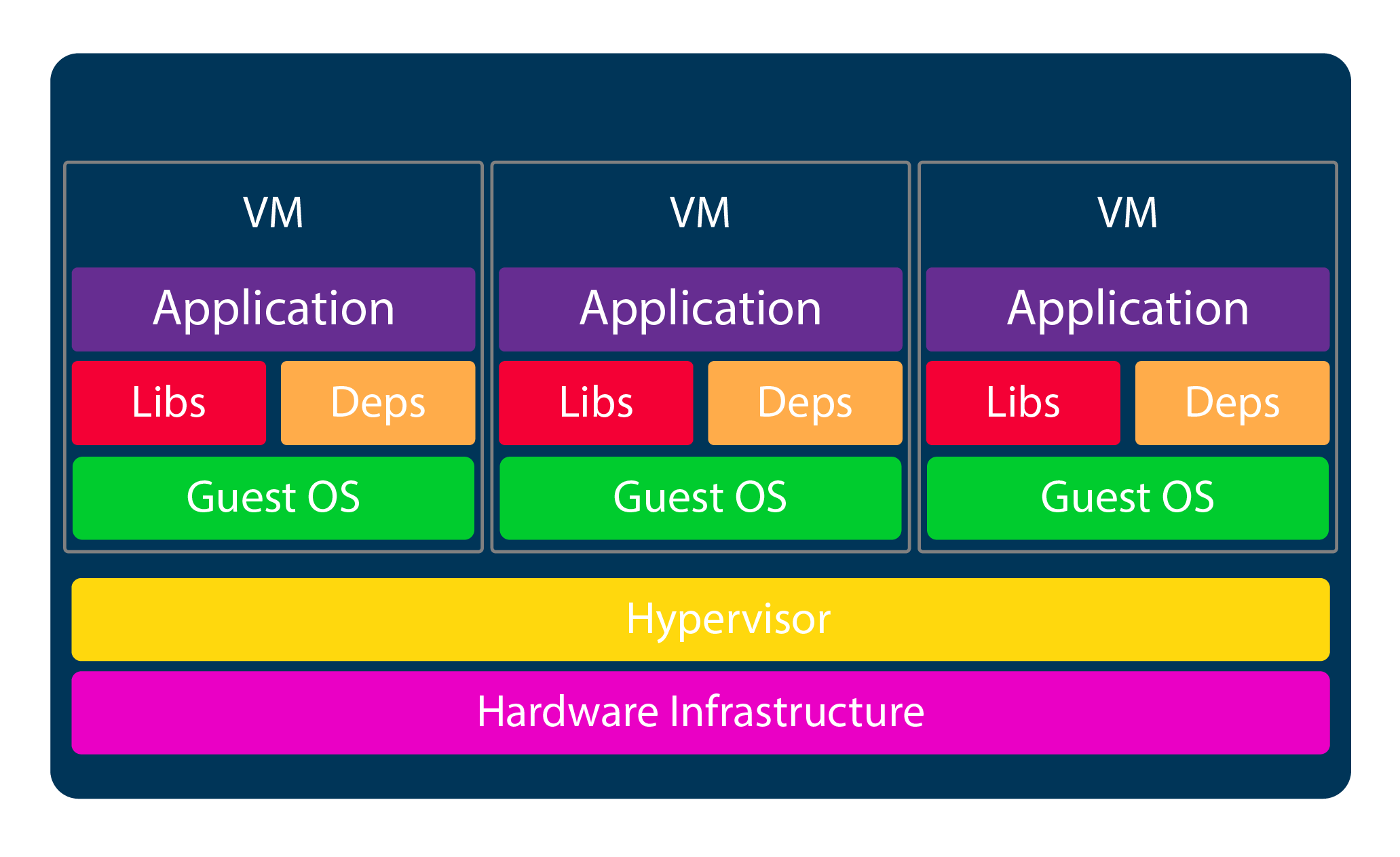

In the case of VMs, we have a hypervisor such as ESXi, Hyper-V, Xen, RHEV, or KVM on the hardware, and then the VMs on top of it.

As you can see, each VM has its own OS inside it, then the dependencies, and then the application.

The extra overhead, higher utilization of underlying resources, and multiple OSs and kernels running at once are some disadvantages of using VMs to maintain different environments. A VM usually takes up gigabytes of disk space, whereas Docker containers are lightweight and typically measured in megabytes.

This lets Docker containers start in a matter of seconds, whereas a VM can take minutes because it has to boot an entire OS.

It is also important to know that Docker provides less isolation, since containers share more resources, such as the kernel, whereas VMs are completely isolated from each other. VMs don’t rely on the underlying OS or kernel, so a single hypervisor can run applications built for different OSs (e.g., Linux-based and Windows-based) side by side.

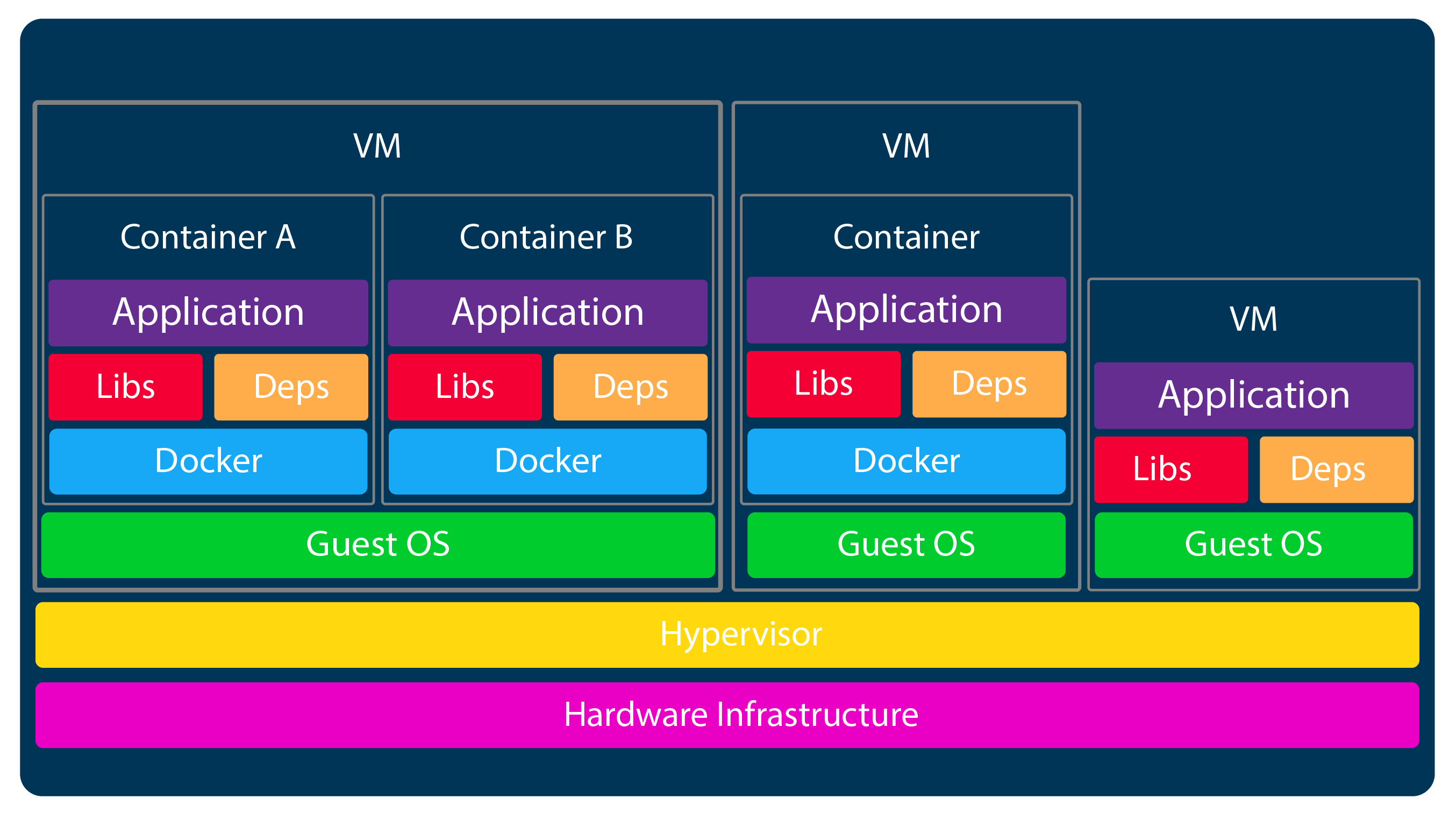

In larger-scale environments with thousands of applications, it is not a question of containers or VMs. It’s containers and VMs. This way we can take advantage of both technologies: virtualization makes it easy to provision Docker hosts as required, and Docker makes it easy to provision applications and manage them quickly as required.

What is Docker?

How it Works

Containerized versions of many applications are readily available today. Many organizations publish their products as images in a public Docker registry called Docker Hub (previously also Docker Store). For example, you can find images of the most common OSs, databases, and other services and tools on Docker Hub. Once you have identified the images you need and installed Docker on your host, bringing up an application is as easy as running the docker run command with the name of the image.
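As a concrete illustration, here is roughly what that looks like for the public nginx image. This is a sketch rather than a recommended setup: the function names, the container name web, and host port 8080 are all choices made for this example.

```shell
# Hypothetical helper: start an nginx web server from the public "nginx" image.
# Docker pulls the image from Docker Hub automatically if it is not present locally.
start_web() {
  # -d          run the container in the background
  # --name web  container name chosen for this example
  # -p 8080:80  publish container port 80 on host port 8080 (8080 is arbitrary)
  docker run -d --name web -p 8080:80 nginx
}

# Stop and remove the container started above.
stop_web() {
  docker stop web
  docker rm web
}
```

After start_web, the server should be reachable at http://localhost:8080 on the Docker host, and docker ps lists the running container.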

Containers vs Images

An image is a package or template, much like a VM template in the virtualization world. It is used to create one or more containers. Containers are running instances of images; each is isolated and has its own environment and set of processes. As we have seen, a lot of products have already been dockerized. If you cannot find the image you need, you can create one yourself and push it to Docker Hub, making it available to the public.

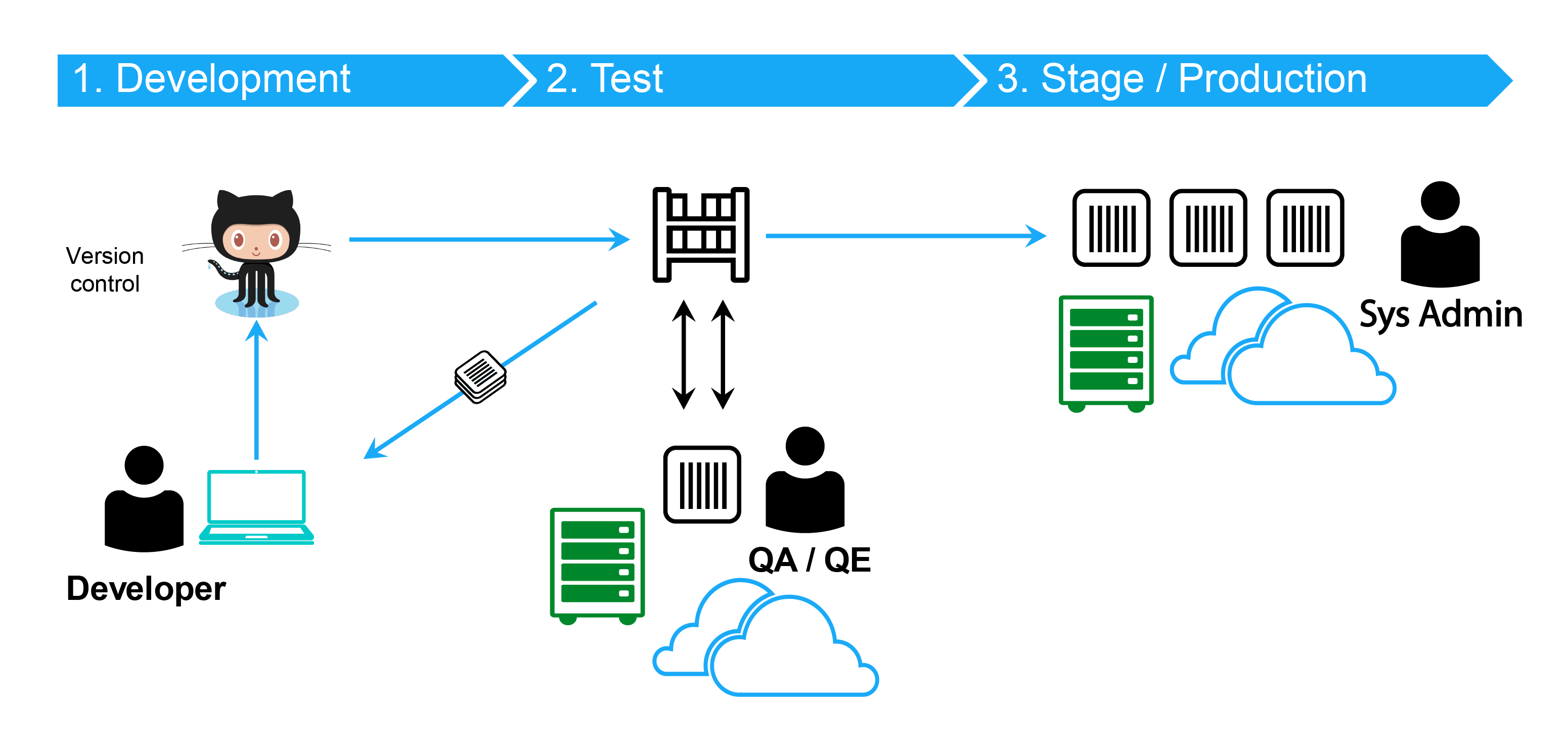

Docker in DevOps

Traditionally, developers build the application and then hand it over to the operations team to deploy and manage in production. The handover comes with a set of instructions: how the host needs to be set up, how the dependencies are configured, and so on.

Since the operations team did not develop the application themselves, they struggle to set it up. When they run into an issue, they have to contact the developers to resolve it. With Docker, the development and operations teams work hand in hand to turn that guide into a Dockerfile that captures both of their requirements.

This Dockerfile is then used to create an image of the application. The image can run on any host with Docker installed, and it is designed to run the same way everywhere. That is one example of how Docker contributes to DevOps culture.
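To make this less abstract, the setup guide for a small application might end up as something like the following. Everything specific here is an assumption for the sake of the example: a Python application with an app.py and a requirements.txt in the current directory, the python:3.12-slim base image, and the placeholder image tag my-app:1.0.

```shell
# Write a Dockerfile for a hypothetical Python application.
# app.py and requirements.txt are assumed to exist in the current directory.
write_dockerfile() {
  cat > Dockerfile <<'EOF'
# Base image with the language runtime the developers need
FROM python:3.12-slim
# Working directory inside the image
WORKDIR /app
# Install dependencies first so this layer can be cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code itself
COPY . .
# Command the container runs when it starts
CMD ["python", "app.py"]
EOF
}

# Build an image from the Dockerfile and run it once.
# "my-app:1.0" is a placeholder tag.
build_and_run() {
  docker build -t my-app:1.0 .
  docker run --rm my-app:1.0
}
```

The Dockerfile replaces the written instructions: the host setup and dependency steps that used to live in a document are now lines the build executes the same way on every machine.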

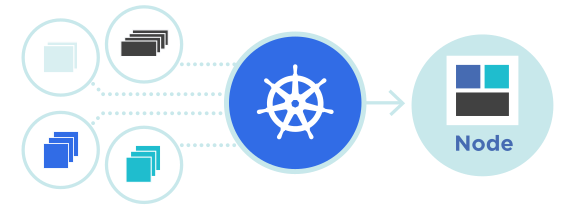

Docker vs Kubernetes

Docker can run a single instance of an application: you just execute the docker run command with the docker CLI. Running an application has never been this easy. The Kubernetes CLI, known as kubectl (Kube control), can run a thousand instances of the same application with a single command, and scaling up takes just one more command. Scaling can also happen automatically, so that the instances and the infrastructure itself scale up and down based on user load. Kubernetes can upgrade those instances one at a time with a rolling update and, if something goes wrong, roll the images back.
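The single commands mentioned above might look like the following sketch. It assumes a working cluster, a reasonably recent kubectl (the --replicas flag on create deployment is not available in old versions), and an image that the cluster can pull; the deployment name my-app, the image tags, and the function names are placeholders.

```shell
# "my-app" and its image tags are placeholders for this example.

# Start 1000 replicas of the same image with a single command.
deploy() {
  kubectl create deployment my-app --image=my-app:1.0 --replicas=1000
}

# Scale the deployment up or down to the given number of replicas.
scale_to() {
  kubectl scale deployment my-app --replicas="$1"
}

# Rolling update: switch the container named "my-app" to a new image.
# The container name is assumed to match the image name; check it with
# "kubectl get deployment my-app -o yaml" if unsure.
upgrade_to() {
  kubectl set image deployment/my-app my-app="$1"
}

# Roll back to the previous revision if the update misbehaves.
roll_back() {
  kubectl rollout undo deployment/my-app
}
```

For example, deploy followed by scale_to 2000 doubles the number of instances, and upgrade_to my-app:2.0 followed by roll_back returns the deployment to the 1.0 image.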

Kubernetes helps test new features by applying them to only a percentage of the instances in the cluster, in the manner of A/B testing. Its open architecture provides support for many network and storage vendors; most network and storage brands have a plugin for Kubernetes. Kubernetes supports a variety of authentication and authorization mechanisms, and all major cloud service providers support it natively.

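Finally, it is important to understand the relationship between Docker and Kubernetes. Kubernetes orchestrates hosts that run applications in the form of containers, and traditionally those were Docker hosts running Docker containers. But it does not have to be Docker; Kubernetes also supports other container runtimes such as containerd and CRI-O. In fact, since version 1.24 Kubernetes no longer ships built-in support for Docker Engine as a runtime, although images built with Docker still run unchanged on those runtimes.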